Integrated Task and Motion Planning for Robotic Systems

Principal Investigator: Professor Lin

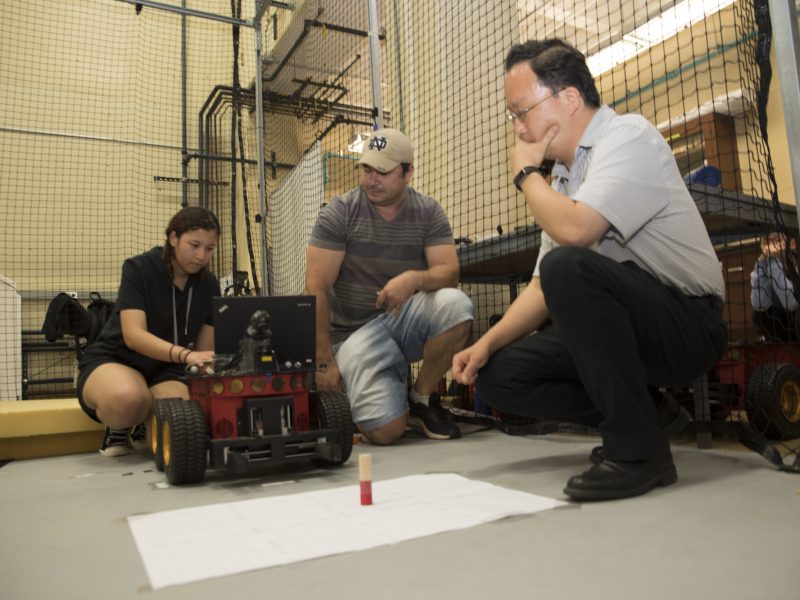

AWaRE REU Researcher: Marina Malone, Loyola University

Project Description: Intelligent physical systems that interpret, plan, and execute high-level tasks must reason over both discrete actions and continuous motions, which poses unique computational challenges. A recent approach to these challenges is integrated task and motion planning (ITMP), which synthesizes discrete task plans and continuous motion trajectories for mobile robots simultaneously. To succeed, an intelligent autonomous robot must perform ITMP in uncertain environments, which requires the ability to observe and act upon its environment while developing a plan to accomplish a task. Our basic idea is to use a camera for perception and to integrate that perception into mission and motion planning. This REU project focuses on developing a demonstration on unmanned ground vehicles that combines perception and learning models with ITMP. We aim to develop a control system in which a robot receives a high-level specification over the continuous robot and object state spaces, but has only unreliable prior knowledge.

Finding: In this project, the undergraduate student developed a grasping motion planner for objects whose position is unreliable, using a Kalman filter, a Linear Quadratic Regulator (LQR), and our novel ITMP algorithm, iterative deepening Signal Temporal Logic (idSTL). We use a monocular camera and the OpenCV toolbox to estimate the object pose. The novelty of this control system is that it integrates perception, planning, and control. This lets us gradually increase the uncertainty of the object’s location, first by giving the robot unreliable locations for the object and later by obstructing the object from the robot’s initial view. We can then evaluate how effectively the robot plans and acts to gather more information about the object it is tasked to find.
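To illustrate how a Kalman filter can reconcile an unreliable prior with camera measurements, the following is a minimal sketch and not the project's actual implementation. It assumes a stationary object on a 2D plane, a measurement model in which the camera observes the position directly with Gaussian noise, and hypothetical values for the prior, the true position, and the noise covariances. The function name `kalman_update` is also illustrative.

```python
import numpy as np

def kalman_update(x, P, z, R):
    """One Kalman measurement update for a stationary 2D position.

    Assumes the measurement is the position itself (H = I), so no
    prediction step is needed between measurements.
    """
    S = P + R                          # innovation covariance
    K = P @ np.linalg.inv(S)           # Kalman gain
    x_new = x + K @ (z - x)            # corrected position estimate
    P_new = (np.eye(len(x)) - K) @ P   # reduced uncertainty
    return x_new, P_new

# Hypothetical values: an unreliable prior given to the robot,
# and the object's true position, which the robot does not know.
x = np.array([1.0, 1.0])               # prior position estimate (m)
P = np.eye(2) * 4.0                    # large covariance: prior is not trusted
R = np.eye(2) * 0.05                   # assumed camera measurement noise
true_pos = np.array([2.0, 0.5])

rng = np.random.default_rng(0)
for _ in range(20):
    # Simulated camera-based position measurement (stand-in for a pose estimate)
    z = true_pos + rng.normal(0.0, np.sqrt(0.05), size=2)
    x, P = kalman_update(x, P, z, R)

print("estimate:", x)
print("covariance diagonal:", np.diag(P))
```

Because the prior covariance is large relative to the measurement noise, the estimate moves quickly toward the measurements and the covariance shrinks with each update. A planner could use that covariance to decide whether the robot should gather more observations before attempting a grasp.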